A Brief History of Containers

02 Apr 2020 - Richard Horridge

This post is a summary of the talk I gave at Docker Birmingham in March 2020. If you are interested in Docker or container / cloud technologies in general, I would encourage you to come along!

Introduction

Why do we want to use containers? They can provide a reproducible environment for running our applications, ensuring that they behave the same way in development as in production. Containers can handle applications with differing versions of libraries, which may otherwise be difficult to install on the same system. Containers also allow independent packages of software to run in isolation from others, which allows a software project to be considered as a number of units rather than as a monolith.

Shared and Static Libraries

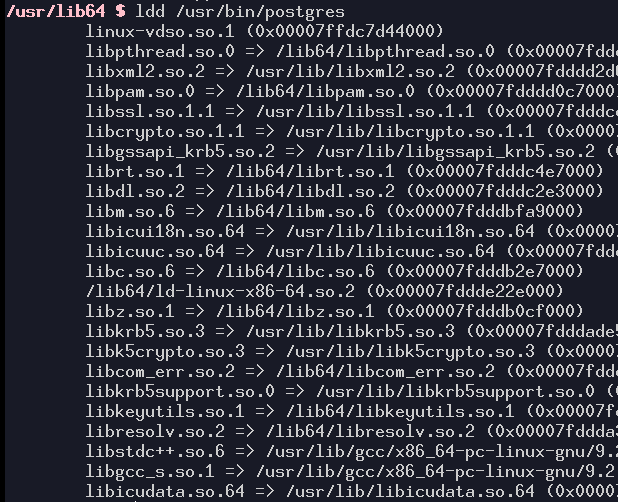

Libraries that the postgres binary depends upon

As an example, consider dynamic libraries. Each of these files contains functions that can be used by many different applications. However, different applications may depend on different versions of a library and installing them together may not work well.

- ICU - Unicode support

- libc - the C standard library

- libstdc++ - the C++ standard library

- libresolv - DNS queries

- libssl / libcrypto - TLS and cryptography

- libxml2 - XML parsing

- libpam - authentication

- librt - POSIX realtime extensions

- libdl - dynamic linking (mostly unused as it is in libc)

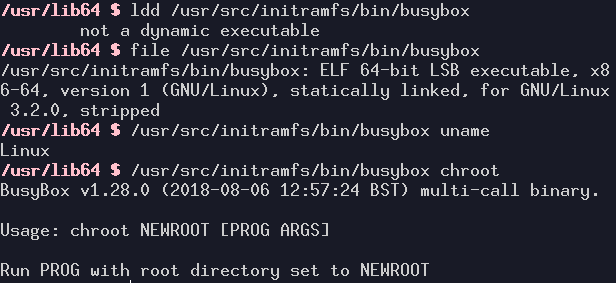

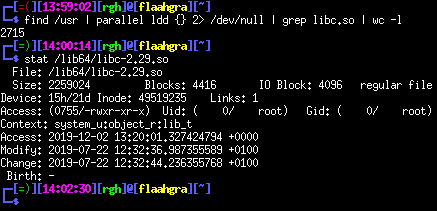

A statically-linked executable

All library functions can be included in the executable file through static linking. This removes any issues with different library versions!

Lots of duplications!

As you can see, a statically linked binary is quite large! If every application were statically linked, the duplicated library code would take up a huge amount of space on a system.

Back to the 1950s / 60s

The processor unit of the IBM System/360 Model 30 (© ArnoldReinhold CC-BY-SA-3.0)

At the time, business computing was dominated by IBM. System/360 was notable for making 8-bit bytes the standard (plenty of machines around at the time used other sizes, with the PDP-10 allowing bytes of any size from 1 to 36 bits within its 36-bit words and the CDC 6600 using 60-bit words!). Computer programs were entered on punched cards and processed in batches.

An IBM zSeries 800 (2006) (public domain)

Virtualisation formed part of the system firmware, and allowed many virtual machines to run, each indistinguishable from the parent architecture. Originally implemented in 1967 with CP-40, it exists to this day (the current version is z/VM 7.1). This virtualisation technology is intrinsically tied to the mainframe architecture.

These systems were and are bare-metal hypervisors, allowing different operating system kernels to be run. Today, they typically run thousands of virtual machine instances.

Unix

Ken Thompson and Dennis Ritchie, creators of Unix (public domain)

Ken Thompson and Dennis Ritchie working at a PDP-11 machine (© Magnus Manske CC-BY-SA-2.0)

Full virtualisation was all that was possible for some time. MIT, Bell Labs and General Electric were working on Multics, a time-sharing operating system. Individual researchers got fed up with the project and began work on a new operating system. This was Unix, a networked, multi-user operating system for the PDP-11.

There was no further progress on virtualisation until 1979. The chroot system call was introduced in Unix Version 7 [1]. It changes the apparent system root directory [2], allowing 'virtualisation' of a system without use of a hypervisor.

Processes started in the chroot can only see that environment. The chroot system call allows a new root directory to be specified. It can be really useful for checking dependencies of packages - Debian uses a chroot environment to avoid breaking developer systems.

There are, however, numerous flaws with chroot:

- It requires the user to be root (which is generally a bad thing!).

- The directory structure of the chroot needs to be set up.

- By default there is no access to system files (which are important!) - no devices (USB, network) or access to any host files (graphics, audio). The solution to this is bind mounting.

Isolation is not complete and it is still possible to escape as the root user! If your process needs to run as root, you need a better solution - namespaces [3] and control groups [4].

This C source code is the original implementation of chroot in Unix Version 7. It could be escaped simply by using cd ..!

    chdir()
    {
        chdirec(&u.u_cdir);
    }

    chroot()
    {
        if (suser())
            chdirec(&u.u_rdir);
    }

    chdirec(ipp)
    register struct inode **ipp;
    {
        [...]

FreeBSD Logo

FreeBSD jails first appeared in 2000 and are built upon chroot [5], [6]. They virtualise access to the file system, system users and networking. Each jail has its own set of users and its own root account. These users are limited to the environment of the jail. Jails allow an entire operating system to be virtualised with minimal overhead.

Sun Ultra 24 from Sun Microsystems (© Jainux CC-BY-SA-3.0)

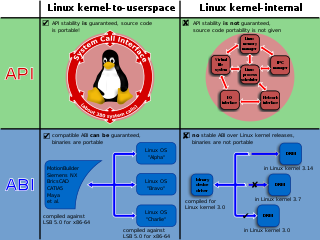

Sun Microsystems developed their own operating system level virtualisation technology for Solaris. The use of the word 'container' was changed to 'zone' to differentiate it from emerging application level virtualisation. An interesting feature of zones is the ability to translate system calls, allowing backwards compatibility with earlier versions of Solaris. This is similar to IBM's approach, which always ensures backwards compatibility with earlier systems. The Linux kernel, on the other hand, has a policy never to break user applications, but this does not extend to interfaces inside the kernel, which can change between releases. As such, there are many older kernel versions still being maintained.

Linux

Illustration of Linux Kernel interfaces (© ScotXW CC-BY-SA-3.0)

Linux Namespaces (© Xmodulo CC-BY-2.0)

Namespaces allow separate network stacks, mount points and other system resources to be visible to different containers. The introduction of user namespaces allows containers to be run as a non-root user, which is a very good thing for security!

Linux Containers Logo

LXC aims to create an environment as close as possible to a standard Linux-based operating system without needing a separate kernel. It makes use of numerous kernel features, including namespaces, control groups and chroots, and it can be useful if an operating system container is required.

Docker

Docker was initially released in 2013. Originally using LXC as the container engine, it moved to a Go runtime (runc). Docker builds containers in stages based on a declarative syntax.

Building in stages means that common elements are reused between containers and don't need to be duplicated. Many containers can derive from a common ancestor and don't need new copies of libraries. The Dockerfile format sets out a sequence of steps to perform to produce a container - these steps are independent of the environment that Docker is running in.

Kubernetes

Docker Swarm

Many different technologies are now built on top of or around Docker and containers. If you're interested in learning more about any of these, there are plenty of people in the room that use these tools and you will no doubt hear more about them at future Docker or CNCF meetings!